As artificial intelligence blurs the line between what’s real and what’s fake, researchers at Arizona State University are working to combat the problem.

Merriam-Webster defines a “deepfake” as “an image or recording that has been convincingly altered and manipulated to misrepresent someone as doing or saying something that was not actually done or said.”

We’ve had viewers reach out to us about scams they’ve experienced involving deepfakes.

Last year, a Valley mom and dad got an alarming call from an unknown number. The caller first said he had been in a car accident with their daughter, then threatened to kill her unless the parents paid him. When the mom asked the caller to put her daughter on the phone, he put on a voice that sounded identical to her daughter’s.

It turned out the voice had been created with AI. Their daughter was, thankfully, safe and had not been involved at all.

Deepfakes have infiltrated politics too, and during an election year, no less.

Authorities issued cease-and-desist orders against two Texas companies they believe were connected to robocalls that used artificial intelligence to mimic President Joe Biden’s voice and discourage people from voting in New Hampshire’s first-in-the-nation primary in January.

Researchers at ASU said it can take just minutes to create a convincing deepfake for ill intent.

It raises the question for many: how do we tell what’s real from what’s fake?

Fulton Schools of Engineering Associate Dean Visar Berisha and his team created a tool to try to answer that, in some cases at least.

Berisha leads the development team, which includes fellow ASU faculty members Daniel Bliss, a Fulton Schools professor of electrical engineering in the School of Electrical, Computer and Energy Engineering; and Julie Liss, College of Health Solutions associate dean and professor of speech and hearing science.

“The default to date has been trust,” Berisha said. “I think, moving forward, the default is going to be skepticism.”

His team created the new technology by looking at what AI cannot do.

“One thing that is for certain is that humans have a really unique way of producing speech,” Berisha said.

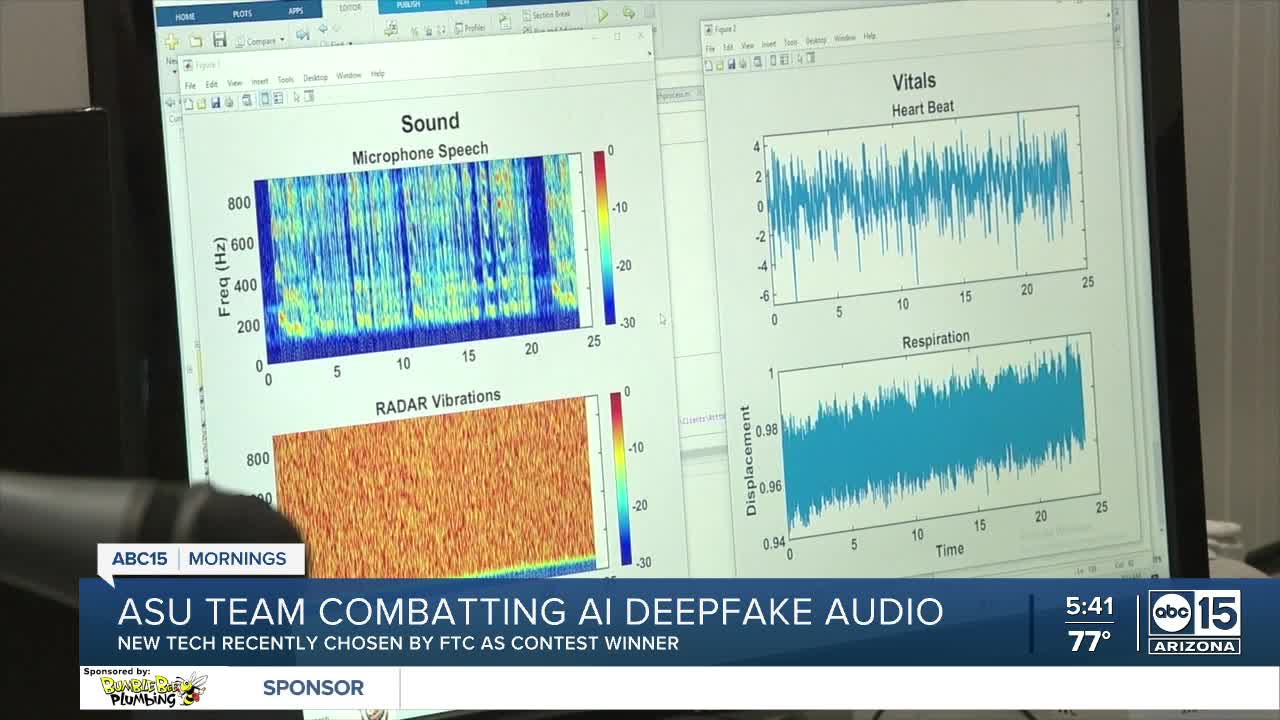

The way we breathe, the way our hearts beat, the way we use our tongues and vocal cords: all of these produce what are called “biosignals,” which can be monitored.

Berisha’s team uses them to prove a recording is human.

“What we’ve developed is a new technology that can authenticate the human origin of speech at the time of recording,” he said.

It uses a radar and a microphone to determine whether biosignals are present while someone is speaking. The resulting audio file gets an embedded watermark verifying its legitimacy, so anyone who later retrieves the recording can confirm that it is authentically human.

The instrument is called “OriginStory,” and the Federal Trade Commission recently chose it as a winner of its Voice Cloning Challenge.

Berisha said the award was validation that this problem needs a solution and that their technology can be used in real-world situations.

“[It can be used in] any sort of high-stakes communication that happens so, for example, media during an election year, or in communications in the defense sector,” he said. “Imagine the FAA talking to airplanes as they’re landing, for example. So, ensuring those communication channels are protected.”

The OriginStory team plans to keep developing the technology and bring it to market, working with a top executive at Microsoft to do so.

They won a share of $35,000 in prize money from the FTC’s contest.

Source: ABC15 Arizona
